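DeepSeek has achieved what many thought impossible: training a top-tier AI model for a reported £4.4 million ($5.6 million), while other companies are estimated to spend between £78 million and £780 million ($100 million to $1 billion) on comparable models. That headline figure covers the GPU time for the final training run of DeepSeek-V3, the base model behind R1, and excludes earlier research and experiments.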

This artificial intelligence breakthrough isn’t just about cost savings. DeepSeek’s latest R1 model has surged into the global top 10 of AI model rankings, outperforming OpenAI’s o1 on several math and reasoning benchmarks. The achievement is all the more remarkable because training its base model reportedly took roughly one-tenth of the GPU hours Meta reported for Llama 3.1 405B.

With 109,000 downloads on Hugging Face and API prices well below its competitors’ – £0.11 ($0.14) per million input tokens versus £11.8 ($15) for OpenAI’s o1 – DeepSeek is rapidly reshaping the AI landscape. This guide explores how this emerging player challenges industry giants and what it means for developers and businesses looking to implement AI.

What is DeepSeek: Understanding the AI Breakthrough

Founded in May 2023 by hedge fund manager Liang Wenfeng, DeepSeek emerged from Hangzhou with a distinctive approach to AI development. The company primarily recruits young, talented graduates from top Chinese universities, fostering an environment where technical prowess takes precedence over traditional work experience.

Origins and Development History

Their journey began with the release of DeepSeek Coder in November 2023, followed by a 67B-parameter language model. The company’s rapid advancement continued with DeepSeek-V2 in May 2024, whose aggressive pricing set off a price war among Chinese AI providers. The release of DeepSeek-Coder-V2, featuring 236 billion parameters and a context length of up to 128K tokens, marked another significant milestone, and was followed by DeepSeek-V3 in December 2024 and the R1 reasoning model in January 2025.

Core Technology Overview

At the heart of DeepSeek’s innovation lies its 671-billion-parameter Mixture-of-Experts (MoE) architecture, which activates only 37 billion parameters per token. The system also employs techniques including Multi-head Latent Attention (MLA) and distillation into smaller models. Together, these innovations allow DeepSeek to:

- Process data efficiently through optimised parameter usage

- Cover image understanding and generation through separate multimodal models such as Janus-Pro, since V3 and R1 themselves are text-only

- Run on machines with limited computing power via the distilled R1 variants (1.5B to 70B parameters); the full 671B model still needs multi-GPU servers

Key Differentiators from Other AI Models

DeepSeek stands apart through its remarkable cost efficiency, with a reported AUD 8.53 million (about US$5.6 million) in training costs. This becomes particularly striking when considering that OpenAI reportedly spent AUD 152.90 million (about US$100 million) to train GPT-4. DeepSeek’s API pricing also offers significant advantages, charging just AUD 0.21 (US$0.14) per million input tokens and AUD 0.43 (US$0.28) per million output tokens.

The company’s commitment to open-source development, releasing model weights for others to download and build on, has earned considerable goodwill within the global AI research community. This approach democratises access to advanced AI technologies and invites scrutiny of potential biases and ethical issues. Through its engineering techniques and efficient resource utilisation, DeepSeek has demonstrated that superior performance need not depend solely on massive computational resources.

Technical Architecture Deep Dive

The architectural foundation of DeepSeek rests upon a sophisticated Mixture-of-Experts (MoE) system, containing 671 billion parameters whilst activating merely 37 billion for each token. This selective activation mechanism forms the cornerstone of its remarkable efficiency.
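The selective activation described above can be illustrated with a toy router. The sketch below is not DeepSeek’s code: it assumes the configuration cited under Model Architecture and Innovation (256 routed experts, 8 active per token, plus one shared expert) and, following our reading of the DeepSeek-V3 technical report, scores experts with a sigmoid affinity and normalises the selected gates to sum to 1. The class name `ToyMoELayer`, the centroid initialisation and the use of scaling vectors in place of real feed-forward experts are illustrative assumptions.

```python
import math
import random


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


class ToyMoELayer:
    """Toy MoE layer: one always-on shared expert plus the top-k of n routed experts.

    Each "expert" is just an elementwise scaling vector standing in for a real
    feed-forward network, so the routing logic stays visible.
    """

    def __init__(self, dim: int, n_routed: int = 256, top_k: int = 8, seed: int = 0):
        rng = random.Random(seed)
        self.top_k = top_k
        # One centroid per routed expert; its dot product with a token gives the affinity
        self.centroids = [[rng.gauss(0, 1) for _ in range(dim)] for _ in range(n_routed)]
        self.routed = [[rng.uniform(0.5, 1.5) for _ in range(dim)] for _ in range(n_routed)]
        self.shared = [rng.uniform(0.5, 1.5) for _ in range(dim)]

    def route(self, x):
        """Pick the top-k experts by sigmoid affinity and normalise their gates to sum to 1."""
        scores = [sigmoid(dot(x, c)) for c in self.centroids]
        top = sorted(range(len(scores)), key=scores.__getitem__, reverse=True)[: self.top_k]
        total = sum(scores[i] for i in top)
        return [(i, scores[i] / total) for i in top]

    def forward(self, x):
        """The shared expert always runs; only the k selected routed experts do any work."""
        out = [s * xi for s, xi in zip(self.shared, x)]
        for i, gate in self.route(x):
            out = [o + gate * w * xi for o, w, xi in zip(out, self.routed[i], x)]
        return out


rng = random.Random(1)
layer = ToyMoELayer(dim=16)
token = [rng.gauss(0, 1) for _ in range(16)]
chosen = layer.route(token)
print(f"{len(chosen)} of {len(layer.centroids)} routed experts active")  # 8 of 256
```

Only the eight selected experts touch the token, which is why compute per token tracks the 37 billion active parameters rather than the full 671 billion. The sketch leaves out load balancing, which a production router needs so that tokens spread evenly across experts.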

Model Architecture and Innovation

The core of DeepSeek’s design lies in the Multi-head Latent Attention (MLA) mechanism, which applies low-rank joint compression to attention keys and values so that only a small latent vector per token needs to be cached. In each MoE layer, the router sends every token to 8 of 256 routed experts, while one shared expert processes all tokens. MLA lets DeepSeek maintain high attention quality whilst minimising the memory occupied by the key-value cache during inference, and the expert routing keeps per-token compute low.
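The key-value compression in MLA can be sketched as a down-projection whose output is the only thing cached, followed by up-projections that rebuild keys and values on demand. This is a simplified illustration under stated assumptions, not DeepSeek’s implementation: `ToyLatentKVCache` and its helpers are hypothetical, multi-head splitting, rotary position embeddings (RoPE) and the query path are omitted, and the dimensions in the final lines (128 heads of size 128, a 512-dimensional latent and a 64-dimensional decoupled RoPE key) are our reading of the DeepSeek-V3 report.

```python
import random


def matvec(m, v):
    """Multiply matrix m (a list of rows) by vector v."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]


def rand_matrix(rows, cols, rng):
    return [[rng.gauss(0, 0.1) for _ in range(cols)] for _ in range(rows)]


class ToyLatentKVCache:
    """Toy low-rank joint KV compression: store one small latent per token and
    rebuild keys and values from it when attention needs them."""

    def __init__(self, d_model: int, d_latent: int, d_kv: int, seed: int = 0):
        rng = random.Random(seed)
        self.w_down = rand_matrix(d_latent, d_model, rng)  # shared compression for K and V
        self.w_up_k = rand_matrix(d_kv, d_latent, rng)     # latent -> key
        self.w_up_v = rand_matrix(d_kv, d_latent, rng)     # latent -> value
        self.latents = []                                  # the only per-token state kept

    def append(self, hidden):
        self.latents.append(matvec(self.w_down, hidden))

    def keys_values(self):
        keys = [matvec(self.w_up_k, c) for c in self.latents]
        values = [matvec(self.w_up_v, c) for c in self.latents]
        return keys, values


def cached_elements_per_token(n_heads, head_dim, latent_dim=None, rope_dim=0):
    """Per-token, per-layer KV-cache size in elements: full K and V, or latent + RoPE key."""
    if latent_dim is None:
        return 2 * n_heads * head_dim
    return latent_dim + rope_dim


# Dimensions as we understand them from the DeepSeek-V3 report (treat as assumptions)
standard = cached_elements_per_token(n_heads=128, head_dim=128)
latent = cached_elements_per_token(128, 128, latent_dim=512, rope_dim=64)
print(standard, latent, round(standard / latent, 1))  # 32768 576 56.9
```

Under those assumptions, each token stores 576 values per layer instead of 32,768, roughly a 57-fold reduction in KV-cache size, which is where the inference memory savings come from.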

Efficiency Optimisation Techniques

The model’s efficiency stems from several optimisations. First, DeepSeek uses an FP8 mixed-precision training framework, which substantially reduces GPU memory usage and speeds up computation. Second, its DualPipe pipeline-parallelism algorithm overlaps computation with communication almost completely, enabling efficient scaling across multiple nodes. As a result, the full training run (pre-training, context extension and post-training) required about 2.79 million H800 GPU hours, roughly one-tenth of the GPU hours reported for comparable models such as Llama 3.1 405B.
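The link between GPU hours and the headline training cost is simple multiplication. The sketch below assumes the US$2 per H800 GPU hour rental price used in the DeepSeek-V3 report and the 30.84 million H100 GPU hours listed on Meta’s Llama 3.1 model card for the 405B model; the constant and function names are illustrative.

```python
DEEPSEEK_V3_GPU_HOURS = 2.79e6      # H800 GPU hours for the full training run (from the text)
RENTAL_USD_PER_GPU_HOUR = 2.0       # rental price assumed in the DeepSeek-V3 report
LLAMA_31_405B_GPU_HOURS = 30.84e6   # H100 GPU hours listed on Meta's Llama 3.1 model card


def training_cost_usd(gpu_hours: float, usd_per_hour: float) -> float:
    """Rental-equivalent cost of the GPU time alone (no staff, data or earlier experiments)."""
    return gpu_hours * usd_per_hour


def share_of(a: float, b: float) -> float:
    """Fraction of b that a represents."""
    return a / b


cost = training_cost_usd(DEEPSEEK_V3_GPU_HOURS, RENTAL_USD_PER_GPU_HOUR)
print(f"~${cost / 1e6:.2f}M")  # ~$5.58M
print(f"{share_of(DEEPSEEK_V3_GPU_HOURS, LLAMA_31_405B_GPU_HOURS):.1%} of Llama 3.1 405B's GPU hours")
```

Because the two figures come from different GPUs (H800 versus H100), the comparison is approximate, and the cost covers GPU rental alone rather than research, staff or data.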

Performance Benchmarks and Metrics

DeepSeek-R1’s reported benchmark results underscore its technical prowess:

- Mathematical Reasoning: Achieved 97.3% on MATH-500, surpassing OpenAI o1’s 96.4%

- Programming Capabilities: Reached the 96.3rd percentile on Codeforces

- General Knowledge: Scored 90.8% on MMLU and 71.5% on GPQA Diamond

The model also performs well in software engineering tasks, scoring 49.2% on SWE-bench Verified, marginally ahead of OpenAI o1’s 48.9%. Its performance extends to advanced mathematical reasoning, with a 79.8% score on AIME 2024, just ahead of OpenAI o1’s 79.2%.

Through these architectural innovations and optimisations, DeepSeek has established itself as a formidable presence in artificial intelligence, delivering exceptional performance while maintaining remarkable computational efficiency.

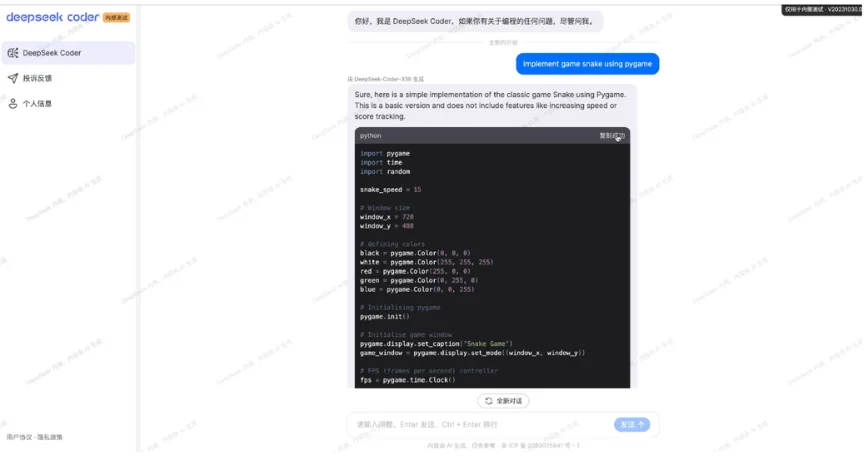

DeepSeek Coder and Development Tools

Trained on an extensive dataset of 2 trillion tokens, DeepSeek-Coder stands out with its unique composition of 87% code and 13% natural language content in both English and Chinese.

Features and Capabilities

The series comes in several sizes, from 1.3B to 33B parameters, to suit different computational budgets. Designed primarily for project-level code work, it supports a 16K context window, enabling code completion and infilling across larger files.

DeepSeek Coder’s performance metrics showcase its prowess, surpassing existing open-source code models. The 33B version leads CodeLlama-34B by 7.9% on HumanEval Python, 9.3% on HumanEval Multilingual, 10.8% on MBPP, and 5.9% on DS-1000. Rather remarkably, the 7B version matches the performance of CodeLlama-34B.

Implementation Examples

The model handles a range of coding scenarios. It offers context-aware code completions that stay consistent with the surrounding project, and for debugging it analyses error messages and returns detailed explanations along with corrected code snippets.

One of its standout features involves unit test generation. Given sufficient context about a class or function, the model can generate comprehensive test cases that verify functionality across different scenarios. Furthermore, it supports fill-in-the-middle code completion, allowing developers to specify placeholders within existing code for targeted completions.
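Fill-in-the-middle works by wrapping the code before and after a gap in special tokens so the model generates only the missing part. The sketch below assumes the token strings shown in the DeepSeek-Coder README (full-width vertical bars and the `▁` character, written here as Unicode escapes) and that the prompt is sent to a base model such as `deepseek-coder-6.7b-base` rather than an instruct model; the `<FILL>` placeholder and both helper names are hypothetical conventions for this example.

```python
# Special tokens as printed in the DeepSeek-Coder README, e.g. "<｜fim▁begin｜>":
# full-width bars are U+FF5C and the "▁" is U+2581
FIM_BEGIN = "<\uff5cfim\u2581begin\uff5c>"
FIM_HOLE = "<\uff5cfim\u2581hole\uff5c>"
FIM_END = "<\uff5cfim\u2581end\uff5c>"


def build_fim_prompt(prefix: str, suffix: str) -> str:
    """Ask the model to generate the code that belongs between prefix and suffix."""
    return f"{FIM_BEGIN}{prefix}{FIM_HOLE}{suffix}{FIM_END}"


def prompt_from_placeholder(source: str, marker: str = "<FILL>") -> str:
    """Split source at a (hypothetical) placeholder marker and build a FIM prompt."""
    prefix, found, suffix = source.partition(marker)
    if not found:
        raise ValueError(f"placeholder {marker!r} not found")
    return build_fim_prompt(prefix, suffix)


snippet = '''def average(xs):
    <FILL>
    return total / len(xs)
'''
prompt = prompt_from_placeholder(snippet)
print(prompt)
```

Check the token strings against the tokenizer you actually load before relying on this; a single wrong character turns a special token into ordinary text.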

Best Practices and Usage Guidelines

For optimal results, developers should give DeepSeek Coder clear, specific prompts. The latest version, DeepSeek-Coder-V2, supports 338 programming languages, up from 86 in the original release.

The system also offers flexibility in deployment. Users can access the model through:

- Direct integration via Hugging Face’s transformers library

- DeepSeek’s paid API service

- Online chat interface with built-in code execution capabilities
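For the Hugging Face route, a minimal sketch might look like the following. It assumes the `deepseek-ai/deepseek-coder-6.7b-instruct` checkpoint, installed `torch` and `transformers` packages, and enough memory for about 13 GB of bfloat16 weights; `build_messages` and `generate_code` are hypothetical helper names, and the heavy imports sit inside the function so the rest of the module loads without them.

```python
MODEL_ID = "deepseek-ai/deepseek-coder-6.7b-instruct"  # one published size; others exist


def build_messages(task: str) -> list:
    """Wrap a coding request in the chat format the instruct models expect."""
    return [{"role": "user", "content": task}]


def generate_code(task: str, max_new_tokens: int = 256) -> str:
    """Download the model on first use and return its reply (needs torch + transformers)."""
    import torch  # heavy imports kept local so build_messages works without them
    from transformers import AutoModelForCausalLM, AutoTokenizer

    device = "cuda" if torch.cuda.is_available() else "cpu"
    tokenizer = AutoTokenizer.from_pretrained(MODEL_ID)
    model = AutoModelForCausalLM.from_pretrained(MODEL_ID, torch_dtype=torch.bfloat16).to(device)

    # Render the chat template to text, then tokenise without adding a second BOS token
    prompt = tokenizer.apply_chat_template(
        build_messages(task), tokenize=False, add_generation_prompt=True
    )
    inputs = tokenizer(prompt, return_tensors="pt", add_special_tokens=False).to(device)
    output = model.generate(
        **inputs,
        max_new_tokens=max_new_tokens,
        do_sample=False,
        eos_token_id=tokenizer.eos_token_id,
    )
    # Keep only the newly generated tokens, not the echoed prompt
    new_tokens = output[0][inputs["input_ids"].shape[1]:]
    return tokenizer.decode(new_tokens, skip_special_tokens=True)


# Example (downloads the weights on first run):
# print(generate_code("Write a Python function that checks whether a string is a palindrome."))
```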

DeepSeek Coder is released under a licence that permits both research and commercial use. Nevertheless, while the model excels at code generation and completion, it should be treated as an assistant rather than a replacement for human programming expertise.

How to Use DeepSeek API

Accessing DeepSeek’s API requires a straightforward authentication process through their official platform.

API Setup and Authentication

To obtain an API key, users create an account on the DeepSeek Platform (platform.deepseek.com) and generate a key in the API Keys section of the dashboard. The base URL for all API requests is https://api.deepseek.com; https://api.deepseek.com/v1 can also be used for OpenAI compatibility (the v1 refers to the URL path, not the model version).
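Because the API is OpenAI-compatible, the official `openai` Python SDK can be pointed at the base URL above. The sketch below assumes the key is stored in an environment variable named `DEEPSEEK_API_KEY` (a naming convention of our own, not something DeepSeek requires) and that the `openai` package is installed; `load_api_key` and `make_client` are hypothetical helpers.

```python
import os
from collections.abc import Mapping

BASE_URL = "https://api.deepseek.com"


def load_api_key(env: Mapping = os.environ, var: str = "DEEPSEEK_API_KEY") -> str:
    """Fetch the key from the environment; the variable name is our own convention."""
    key = env.get(var, "").strip()
    if not key:
        raise RuntimeError(f"Set {var} to the key generated in the API Keys section")
    return key


def make_client():
    """Return an OpenAI SDK client pointed at DeepSeek (requires `pip install openai`)."""
    from openai import OpenAI  # imported lazily so load_api_key works without the SDK

    return OpenAI(api_key=load_api_key(), base_url=BASE_URL)


# Example (needs network access and a valid key):
# client = make_client()
# reply = client.chat.completions.create(
#     model="deepseek-chat",
#     messages=[{"role": "user", "content": "Hello"}],
# )
# print(reply.choices[0].message.content)
```

Keeping the key out of source code makes it harder to leak through version control.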

Making a Basic Request

The implementation process follows an OpenAI-compatible format, making it accessible for developers familiar with similar APIs. Here’s a basic Python implementation for code generation:

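```python
import requests

API_KEY = "your_api_key"  # replace with the key generated in the dashboard
API_URL = "https://api.deepseek.com/chat/completions"

headers = {
    "Content-Type": "application/json",
    # The HTTP header name is always spelled "Authorization"
    "Authorization": f"Bearer {API_KEY}",
}

data = {
    "model": "deepseek-chat",
    "messages": [
        {"role": "system", "content": "You are a helpful assistant"},
        {"role": "user", "content": "Write a factorial function"},
    ],
    "stream": False,
}

# Send the request and print the assistant's reply
response = requests.post(API_URL, headers=headers, json=data, timeout=60)
response.raise_for_status()
print(response.json()["choices"][0]["message"]["content"])
```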

Integration Guidelines

For smooth integration, developers should first review the API documentation. The API supports both streaming and non-streaming responses. Because it is a standard HTTPS API, it can be called from any programming language, and the documentation includes examples for cURL, Python and Node.js.
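When `stream` is set to true, the response arrives as server-sent events. The sketch below assumes the OpenAI-style format, in which each event is a `data:` line carrying a JSON chunk whose `choices[0].delta.content` holds the next fragment and the stream ends with `data: [DONE]`; `iter_stream_text` is a hypothetical helper, and it skips any line that does not start with `data:`, such as keep-alive comments.

```python
import json
from typing import Iterable, Iterator, Union


def iter_stream_text(lines: Iterable[Union[str, bytes]]) -> Iterator[str]:
    """Yield text fragments from OpenAI-style server-sent-event lines."""
    for raw in lines:
        line = raw.decode("utf-8") if isinstance(raw, bytes) else raw
        line = line.strip()
        if not line.startswith("data:"):
            continue  # blank separators and comments such as ": keep-alive"
        payload = line[len("data:"):].strip()
        if payload == "[DONE]":
            break  # end-of-stream sentinel
        chunk = json.loads(payload)
        for choice in chunk.get("choices", []):
            text = (choice.get("delta") or {}).get("content")
            if text:
                yield text


# Example with requests (reusing API_URL, headers and data from the request example):
# with requests.post(API_URL, headers=headers, json={**data, "stream": True},
#                    stream=True, timeout=60) as r:
#     r.raise_for_status()
#     for piece in iter_stream_text(r.iter_lines()):
#         print(piece, end="", flush=True)
```

Decoding the raw bytes as UTF-8 inside the parser avoids relying on the response's declared character set, which matters for non-English output.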

Cost Structure and Pricing

The pricing structure is competitive with other AI services. For the R1 reasoning model, input tokens that miss the cache are priced at AUD 0.84 per million tokens, cache hits cost AUD 0.21 per million tokens, and output tokens are charged at AUD 3.35 per million tokens.

This makes DeepSeek’s API a cost-effective alternative to OpenAI’s pricing of AUD 11.47 per million tokens for equivalent services. The platform’s context caching works automatically, with no configuration required: when a request repeats a prompt prefix the system has already seen, those tokens are billed at the cache-hit rate, reducing both cost and latency for frequently used prompts. At the rates above, cached input costs 75% less than uncached input, and DeepSeek cites savings of up to 90% for repeated queries.
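The per-request cost under these rates can be estimated from three token counts. This sketch hard-codes the AUD figures quoted above as defaults (prices change, so treat them as placeholders) and assumes you know how many prompt tokens were served from the cache, which, to our understanding, the API reports in its usage statistics; `Pricing`, `request_cost` and `cache_discount` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Pricing:
    """Prices in AUD per million tokens; defaults are the figures quoted in the text."""
    input_cache_miss: float = 0.84
    input_cache_hit: float = 0.21
    output: float = 3.35


def request_cost(prompt_tokens: int, cached_tokens: int, output_tokens: int,
                 pricing: Pricing = Pricing()) -> float:
    """Estimated cost of one request in AUD."""
    if not 0 <= cached_tokens <= prompt_tokens:
        raise ValueError("cached_tokens must be between 0 and prompt_tokens")
    uncached = prompt_tokens - cached_tokens
    total = (uncached * pricing.input_cache_miss
             + cached_tokens * pricing.input_cache_hit
             + output_tokens * pricing.output)
    return total / 1_000_000


def cache_discount(pricing: Pricing = Pricing()) -> float:
    """Fractional saving on input tokens that hit the cache."""
    return 1 - pricing.input_cache_hit / pricing.input_cache_miss


# A 10,000-token prompt whose first 8,000 tokens are a cached prefix, plus a 1,000-token reply
print(f"AUD {request_cost(10_000, 8_000, 1_000):.5f}")
print(f"Cache discount on input: {cache_discount():.0%}")  # 75%
```

In this example the output tokens cost as much as all the input combined, so caching helps most for long, repeated prompts with short replies.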

Conclusion – What is DeepSeek?

DeepSeek is a remarkable example of efficient AI development, showing that exceptional performance need not depend on massive budgets. Its achievement of training a top-tier model for a reported £4.4 million while remaining competitive with industry giants demonstrates the potential for cost-effective AI development. The company’s Mixture-of-Experts architecture, coupled with Multi-head Latent Attention, has enabled significant computational efficiency gains without sacrificing capability.

The success of DeepSeek Coder, particularly its ability to outperform established open-source code models across various benchmarks, highlights the practical applications of these technological advances. Through competitive API pricing and an open-source approach, the company has created an accessible pathway for developers and businesses to harness advanced AI capabilities.

This combination of technical excellence and cost efficiency positions them as a significant force in reshaping AI development standards. Their approach suggests a future where advanced AI capabilities become more accessible to a broader range of organisations rather than exclusive to tech giants with enormous resources.

What is DeepSeek used for?

DeepSeek’s models are used for natural language processing (NLP) tasks such as text generation, conversation, content creation and code generation. Its chat assistant provides responses similar to ChatGPT and is gaining attention for its advanced AI capabilities.

Is DeepSeek a threat to Nvidia?

Not directly. DeepSeek builds AI models, while Nvidia specialises in hardware, particularly the GPUs used to train and run those models. However, if training techniques like DeepSeek’s reduce the computing power needed for top-tier models, they could indirectly affect demand for Nvidia’s products.

Is DeepSeek ChatGPT?

No. Both are AI language models, but they are developed by different organisations: ChatGPT is created by OpenAI, while DeepSeek is developed by the Hangzhou-based company of the same name, with its own models and architecture.

Is DeepSeek a Chinese company?

Yes. DeepSeek is based in Hangzhou, China, and has gained traction for its advances in AI, positioning itself as a strong competitor in the global AI space.

Is DeepSeek AI free?

DeepSeek offers both free and paid access.

The web and mobile chat apps are free to use, while API access is billed per token. The open-weight models can also be downloaded and run on your own hardware.

Is DeepSeek AI good?

Yes, it is considered highly effective.

It has been praised for its fast response times, accuracy in generating human-like text, and competitive performance compared to other AI models like ChatGPT. However, like any AI, its effectiveness depends on the use case and specific tasks.

How does DeepSeek Coder compare to other coding assistants?

DeepSeek Coder is a specialised model family trained on a large dataset of code and natural language. It outperforms many existing open-source code models on various benchmarks, and its latest version supports 338 programming languages. It is particularly effective for code completion, debugging and unit test generation.